Introduction

• Image to Image Translation
• The U-Net Model
• The U-Net Generator Network
• The Markovian Discriminator
• The Model Loss Function
• Conclusion

Definition 3.1

Image-to-image translation is the controlled conversion of an input image into a target image. It is a challenging task that typically requires a hand-crafted loss function [12].


3.1 Image to Image Translation

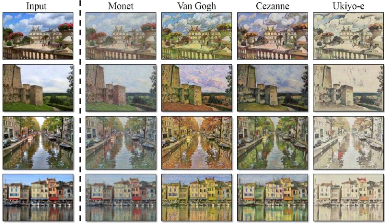

Inspired by language translation, image-to-image translation starts from the observation that every scene can have multiple representations, such as grayscale, RGB, or a sketch. The process of translating an image into another domain is called style transfer (Figure 3.1).


Figure 3.1: style transfer / Source [25]

3.1.1 Pix2pix model

Definition 3.2

Pix2pix is a GAN model designed for image-to-image translation tasks. The architecture was proposed by Phillip Isola et al. in their 2016 paper "Image-to-Image Translation with Conditional Adversarial Networks" [9]. The pix2pix model is an implementation of the conditional GAN (cGAN), in which the generation of the output image is conditioned on a given input image.


In the training process of the Pix2pix model, we give the generator an image to condition the generation process. The output of the generator is then fed to the discriminator together with the original image that was given to the generator. Next, we provide the discriminator with a pair of real images (the original and the target image) from the dataset. The discriminator is supposed to distinguish real pairs from fake pairs, and the generator is supposed to fool the discriminator, hence the adversarial nature of the model.
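The adversarial part of this training step can be sketched in plain Python. This is a minimal sketch, not the implementation from [9]: it assumes the discriminator returns a single probability that an (input, output) pair is real, whereas a Markovian discriminator returns a grid of such probabilities. The function names `bce`, `discriminator_loss` and `generator_adversarial_loss` are hypothetical.

```python
import math


def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy of one predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp so log() never receives 0
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """d_real = D(input, target) for a real pair, d_fake = D(input, G(input)) for a fake pair.

    Assumption: D outputs one probability per pair. The discriminator is
    trained to output 1 for real pairs and 0 for fake pairs.
    """
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_adversarial_loss(d_fake: float) -> float:
    """The generator is rewarded when D labels its fake pair as real (target 1)."""
    return bce(d_fake, 1.0)


# Hypothetical discriminator outputs for one training step.
print(discriminator_loss(d_real=0.9, d_fake=0.2))
print(generator_adversarial_loss(d_fake=0.2))
```

The generator's loss shrinks as `d_fake` approaches 1, which is the "fooling" described above, while the discriminator's loss shrinks as `d_real` approaches 1 and `d_fake` approaches 0.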

· Note The Pix2pix model uses two loss functions, the adversarial loss and the L1 loss. This way, we do not only force the generator to produce plausible images for the target domain, but also images that are plausible as a transformation of the original image.
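Combined, the two losses give the objective below, written in the spirit of [9]. Here $x$ is the original (input) image, $y$ the target image, $G(x)$ the generated image, $D$ the discriminator and $\lambda$ a weight balancing the two terms (a value of $\lambda = 100$ is commonly used). For readability this sketch omits the noise input $z$ that appears in the formulation of [9], and the L1 term is written as the per-image mean of Eq. (3.1) with $\hat{y} = G(x)$, which differs from a summed L1 norm only by the constant factor $1/n$.

```latex
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right]
  + \mathbb{E}_{x}\left[\log\left(1 - D(x, G(x))\right)\right]

\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\left[\frac{1}{n}\sum_{i=1}^{n}\left|G(x)_i - y_i\right|\right]

G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\, \mathcal{L}_{L1}(G)
```

The discriminator maximizes the cGAN term, while the generator minimizes both the cGAN term and the weighted L1 term.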

The L1 loss is the mean absolute difference between the generated image and the expected (target) image:

Theorem 3.1

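$$\mathcal{L}_{L1} = \frac{1}{n}\sum_{i=1}^{n} \left| \hat{y}_i - y_i \right| \qquad (3.1)$$

where $\hat{y}_i$ is the $i$-th pixel of the generated image, $y_i$ the corresponding pixel of the expected image, and $n$ the number of pixels.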

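Eq. (3.1) and its combination with the adversarial term can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: images are flattened into equal-length lists of pixel values, the discriminator output `d_fake` is a single probability, and the weight `lam = 100.0` is an assumed, commonly used value. The function names are hypothetical.

```python
import math


def l1_loss(generated, target):
    """Mean absolute difference between two flattened images (Eq. 3.1)."""
    if len(generated) != len(target):
        raise ValueError("images must have the same number of pixels")
    n = len(target)
    return sum(abs(g - t) for g, t in zip(generated, target)) / n


def generator_loss(d_fake, generated, target, lam=100.0):
    """Adversarial term -log D(x, G(x)) plus the L1 term weighted by lam (assumed value)."""
    eps = 1e-12                              # avoid log(0) for a fully confident discriminator
    adversarial = -math.log(max(d_fake, eps))
    return adversarial + lam * l1_loss(generated, target)


# Toy 3-pixel images: absolute differences 0, 0.5 and 0.5, so the mean is 1/3.
print(l1_loss([0.0, 0.5, 1.0], [0.0, 1.0, 0.5]))
print(generator_loss(0.5, [0.0, 0.5, 1.0], [0.0, 1.0, 0.5]))
```

A large weight on the L1 term keeps the output close to the target pixel by pixel, while the adversarial term pushes it toward images the discriminator considers realistic.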

3.2 The U-net Model

First introduced by Phillip Isola et al. in their 2016 paper "Image-to-Image Translation with Conditional Adversarial Networks" [9], the U-Net model is an implementation of the Pix2pix model in which the generator is a U-Net and the discriminator is a Markovian discriminator, also known as a PatchGAN. This network showed superior performance on image-to-image translation tasks.
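A Markovian discriminator classifies each local patch of the image pair as real or fake rather than the whole image, so its output is a grid of scores. How large a patch each score covers follows from the convolution layout. The sketch below assumes the 70×70 configuration reported in [9], taken here as five 4×4 convolutions with strides 2, 2, 2, 1, 1 and padding 1; the helper names and the `PATCHGAN_70` constant are hypothetical.

```python
def receptive_field(layers):
    """Receptive field of one output unit for a stack of (kernel, stride) conv layers.

    Walks backwards from a single output unit: r_in = (r_out - 1) * stride + kernel.
    """
    r = 1
    for kernel, stride in reversed(layers):
        r = (r - 1) * stride + kernel
    return r


def output_size(size, layers, padding=1):
    """Side length of the output score grid, assuming the same padding on every layer."""
    for kernel, stride in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


# Assumed 70x70 PatchGAN layout: 4x4 convolutions with strides 2, 2, 2, 1, 1.
PATCHGAN_70 = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

print(receptive_field(PATCHGAN_70))   # 70
print(output_size(256, PATCHGAN_70))  # 30
```

Under these assumptions, a 256×256 input pair yields a 30×30 grid of real/fake scores, each judging a 70×70 region; averaging the per-patch losses gives the discriminator's loss for the whole image.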

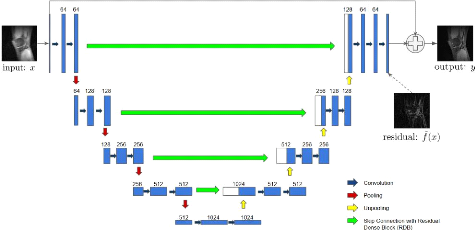

3.2.1 The U-Net Generator Model

U-Net (Figure 3.2) is a model first built for semantic segmentation.

It consists of a contracting path and an expansive path. The contracting path

is a typical architecture of a convolutional network. It consists of the

repeated application of two 3x3 convolutions, each followed by a ReLU and a 2x2

max pooling operation with stride 2 for downsampling. At each downsampling step

we double the number of feature channels. Every step in the expansive path

consists of an upsampling of the feature map followed by a 2x2 convolution that

halves the number of feature channels, a concatenation with the correspondingly

cropped feature map from the contracting path, and two 3x3 convolutions, each

followed by a ReLU. At the final layer a 1x1 convolution is used to map each

64-component feature vector to the desired number of classes. In total the

network has 23 convolutional layers. [1]
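The size and channel bookkeeping described above can be traced with a short script. It is a sketch that assumes the configuration shown in Figure 3.2 (a 572×572 input, four downsampling steps starting from 64 channels, unpadded 3×3 convolutions and two output classes); the function name `unet_shapes` and its parameters are hypothetical.

```python
def unet_shapes(input_size=572, base_channels=64, depth=4, num_classes=2):
    """Trace the spatial size through an unpadded U-Net and count its conv layers.

    Returns (output_size, output_channels, number_of_conv_layers).
    """
    size, channels, convs = input_size, base_channels, 0
    skips = []  # (size, channels) of each contracting-path output, reused by the skip connections

    # Contracting path: each unpadded 3x3 conv trims 2 pixels, then 2x2 max pooling halves the size.
    for _ in range(depth):
        size -= 4
        convs += 2
        skips.append((size, channels))
        size //= 2
        channels *= 2  # channels double at each downsampling step

    # Bottom of the "U": two more 3x3 convs at the lowest resolution.
    size -= 4
    convs += 2

    # Expansive path: 2x2 up-conv doubles the size and halves the channels,
    # the skip map is center-cropped and concatenated, then two 3x3 convs follow.
    for skip_size, skip_channels in reversed(skips):
        size *= 2
        channels //= 2
        convs += 1
        assert skip_channels == channels  # concatenation yields 2 * channels
        crop = (skip_size - size) // 2    # pixels removed from each border of the skip map
        assert crop >= 0
        size -= 4
        convs += 2

    convs += 1  # final 1x1 conv maps the 64-channel features to num_classes
    return size, num_classes, convs


print(unet_shapes())  # (388, 2, 23)
```

With the defaults, the trace ends at a 388×388 output and counts 23 convolutional layers, matching the figure quoted above. The generator used in [9] differs in detail: it uses padded, strided convolutions, so its skip connections need no cropping and the output has the same size as the input.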

Figure 3.2: unet / Source[1]

· Note You may notice a similarity between the U-Net generator and an encoder-decoder network; the difference is the skip connections between the down-sampling and the up-sampling layers. To gain further intuition on why using a U-Net as a generator for an image-to-image translation
