
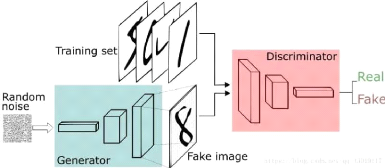

2.3 Generative Adversarial Networks

2.3.2.1 The Generator Model

To train the generator, the two models are combined: the generator produces an image and the discriminator classifies it. If the discriminator classifies the image as fake, the generator's weights are updated, with the goal of producing fake samples that are indistinguishable from the samples in the data set.

2.3.2.2 The Discriminator Model

The discriminator takes an image from the data set with label 1, with the goal of classifying it as real. If the discriminator fails, its weights are updated.

Figure 2.5: Basic GAN architecture. Source: [1]
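The label convention used by the two models can be made concrete with a short sketch. It assumes the binary cross-entropy loss and a label of 0 for generated images, which are the usual choices but are not stated in the text; the names `REAL`, `FAKE`, `discriminator_loss` and `generator_loss` are illustrative only.

```python
import math

REAL, FAKE = 1.0, 0.0  # assumed label convention: 1 = real image, 0 = generated image


def binary_cross_entropy(prediction, label, eps=1e-12):
    """Loss for one discriminator output in (0, 1) against a 0/1 label."""
    prediction = min(max(prediction, eps), 1.0 - eps)  # avoid log(0)
    return -(label * math.log(prediction)
             + (1.0 - label) * math.log(1.0 - prediction))


def discriminator_loss(scores_real, scores_fake):
    """The discriminator wants real images scored as 1 and fakes scored as 0."""
    losses = [binary_cross_entropy(s, REAL) for s in scores_real]
    losses += [binary_cross_entropy(s, FAKE) for s in scores_fake]
    return sum(losses) / len(losses)


def generator_loss(scores_fake):
    """In the combined model the generator is rewarded when the
    discriminator scores its fake images as real."""
    return sum(binary_cross_entropy(s, REAL) for s in scores_fake) / len(scores_fake)


# A confident, correct discriminator gives itself a low loss
# and gives the generator a high one.
print(discriminator_loss([0.9], [0.1]))
print(generator_loss([0.1]))
```

The generator's loss falls as the discriminator's score for its fake images rises, which is the sense in which the fakes become indistinguishable from real samples.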

· Note: GANs differ from traditional neural networks. A traditional neural network usually has a single cost function expressed in terms of its own parameters, $J(\theta)$. A GAN, in contrast, has two cost functions, one for the generator and one for the discriminator, and each is expressed in terms of both networks' parameters: $J^{(G)}(\theta^{(G)}, \theta^{(D)})$ for the generator and $J^{(D)}(\theta^{(G)}, \theta^{(D)})$ for the discriminator.

The other difference is that a traditional neural network updates all of its parameters $\theta$ in each training cycle, whereas a GAN has one training cycle for the generator and another for the discriminator. In each cycle the network being trained updates only its own weights, which means it controls only part of the parameters that determine its loss.
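The two training cycles can be sketched on a toy one-dimensional problem. Everything in this sketch is an assumption made for illustration: the generator is a linear map with parameters `theta_g`, the discriminator is a logistic unit with parameters `theta_d`, the costs are the usual cross-entropy forms, and gradients are estimated by finite differences rather than backpropagation. The point it shows is that both costs read both parameter sets, while each cycle changes only one of them.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def log_clipped(p):
    return math.log(min(max(p, 1e-12), 1.0))  # guard against log(0)


def generate(theta_g, z):
    scale, shift = theta_g
    return scale * z + shift


def discriminate(theta_d, x):
    weight, bias = theta_d
    return sigmoid(weight * x + bias)


def cost_d(theta_g, theta_d, reals, noise):
    """Discriminator cost: depends on both parameter sets."""
    real_term = sum(-log_clipped(discriminate(theta_d, x)) for x in reals) / len(reals)
    fake_term = sum(-log_clipped(1.0 - discriminate(theta_d, generate(theta_g, z)))
                    for z in noise) / len(noise)
    return real_term + fake_term


def cost_g(theta_g, theta_d, noise):
    """Generator cost: also depends on both parameter sets."""
    return sum(-log_clipped(discriminate(theta_d, generate(theta_g, z)))
               for z in noise) / len(noise)


def numeric_grad(f, params, h=1e-5):
    """Central finite differences; a stand-in for backpropagation."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += h
        down[i] -= h
        grads.append((f(up) - f(down)) / (2.0 * h))
    return grads


def discriminator_cycle(theta_g, theta_d, reals, noise, lr=0.05):
    # theta_g is read but never modified here
    grads = numeric_grad(lambda p: cost_d(theta_g, p, reals, noise), theta_d)
    return [p - lr * g for p, g in zip(theta_d, grads)]


def generator_cycle(theta_g, theta_d, noise, lr=0.05):
    # theta_d is read but never modified here
    grads = numeric_grad(lambda p: cost_g(p, theta_d, noise), theta_g)
    return [p - lr * g for p, g in zip(theta_g, grads)]


reals = [3.8, 4.0, 4.2]      # toy "data set"
noise = [-0.5, 0.0, 0.5]     # fixed noise inputs, for reproducibility
theta_g, theta_d = [1.0, 0.0], [0.1, 0.0]
for _ in range(100):
    theta_d = discriminator_cycle(theta_g, theta_d, reals, noise)
    theta_g = generator_cycle(theta_g, theta_d, noise)
print(theta_g, theta_d)
```

Because each network moves only its own parameters, a step that lowers one cost can raise the other, which is why GAN training is usually described as a game between two players rather than a single minimization.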

2.3.3 Generative Adversarial Network Architectures

Even though GANs are a new technology, a large amount of research has gone into them, which has led to new, more advanced architectures for various applications. Some of these architectures are listed below:

Vanilla GAN:

Deep convolutional GAN: since Ian Goodfellow's original GAN paper, there have been many attempts to use CNNs as part of the GAN architecture, because CNNs are superior on visual tasks. Radford et al. succeeded in 2015 in their paper "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks" [17], in which a CNN is used as both the generator and the discriminator. Some guidelines for implementing a DC-GAN:

Strided convolutions are preferred over pooling layers in both the generator and the discriminator.

Batch normalization should be used in both the generator and the discriminator.

For deep architectures, fully connected layers should be removed.

In the generator, use the ReLU activation function in every layer except the last, where Tanh is preferred over sigmoid because the images are normalized to (-1, 1) rather than (0, 1).
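The effect of replacing pooling with strides, and of the Tanh output range, can be checked with a little arithmetic. The sketch below uses the standard output-size formulas for convolutions and transposed convolutions; the kernel size 4, stride 2, padding 1 and the 4-to-64 pixel layer plan are assumptions chosen for illustration, not values taken from the text.

```python
def conv_output_size(size, kernel=4, stride=2, padding=1):
    """Spatial size after a strided convolution (discriminator side)."""
    return (size + 2 * padding - kernel) // stride + 1


def transposed_conv_output_size(size, kernel=4, stride=2, padding=1):
    """Spatial size after a strided transposed convolution (generator side)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_sizes(start=4, layers=4):
    sizes = [start]
    for _ in range(layers):
        sizes.append(transposed_conv_output_size(sizes[-1]))
    return sizes


def discriminator_sizes(start=64, layers=4):
    sizes = [start]
    for _ in range(layers):
        sizes.append(conv_output_size(sizes[-1]))
    return sizes


def to_tanh_range(pixel):
    """Map an 8-bit pixel value in [0, 255] to [-1, 1], the range of Tanh."""
    return pixel / 127.5 - 1.0


def from_tanh_range(value):
    """Map a generator output in [-1, 1] back to an 8-bit pixel value."""
    return round((value + 1.0) * 127.5)


print(generator_sizes())      # [4, 8, 16, 32, 64]
print(discriminator_sizes())  # [64, 32, 16, 8, 4]
print(to_tanh_range(0), to_tanh_range(255))  # -1.0 1.0
```

With these settings each strided layer doubles or halves the resolution by itself, so no pooling or separate upsampling layer is needed, and the training images and the Tanh output share the same value range.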

Conditional GAN: a type of GAN introduced by University of Montreal student Mehdi Mirza and Flickr AI architect Simon Osindero, in which the generator and the discriminator are both conditioned on some auxiliary information. This information can be anything: a label, a set of tags, a written description, etc. (Figure 2.6).

Figure 2.6: Conditional GAN architecture. Source: [1]

· Note: within the explanation of the C-GAN, we will take the auxiliary information to be a label, for simplicity.
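One common way to condition both networks on a label is to append a one-hot encoding of the label to each network's input. The sketch below shows only that input construction; the helper names, the noise length of 100 and the 10 classes are hypothetical choices, and the networks themselves are left out.

```python
import random


def one_hot(label, num_classes):
    """Encode an integer class label as a 0/1 vector."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def generator_input(noise, label, num_classes):
    """Generator sees the noise vector together with the label."""
    return list(noise) + one_hot(label, num_classes)


def discriminator_input(image_pixels, label, num_classes):
    """Discriminator sees the (flattened) image together with the same label."""
    return list(image_pixels) + one_hot(label, num_classes)


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(100)]
g_in = generator_input(noise, label=3, num_classes=10)
print(len(g_in))  # 110
```

Because the discriminator receives the label as well, it can reject an image that looks realistic but does not match the requested class, which pushes the generator to respect the condition.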

Stack GAN: translating text into an image is a challenging task. The GAN architecture built for this task is called StackGAN, short for stacked generative adversarial networks ??, introduced in the paper "StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks". The network is composed of two GANs stacked on top of each other, each with a specific role in creating the image. The process can be described by the two stages below:

Stage 1: turn the text into a primitive sketch of the image.

Stage 2: translate the sketch into a full, realistic-looking image.

Super Resolution GAN: the task of upscaling an image into a high-resolution image ?? is carried out using an architecture called SR-GAN, short for super-resolution generative adversarial network ??, introduced in the paper "Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network" [].

3

The Pix2Pix Model
